Extension of the quadruped robot WARUGADAR


Latest revision as of 14:29, 10 March 2009


Part 1: project profile

Project name

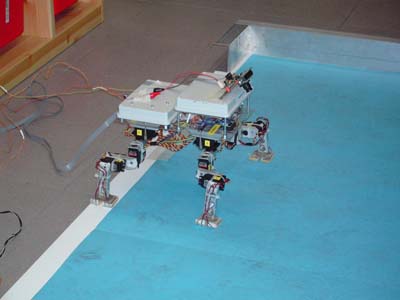

Extension of the quadruped robot WARUGADAR.

Project short description

- Warugadar is still far from being a fully functional autonomous robot. It would benefit from any additional sensor that makes it more aware of its surroundings. We want to mount a camera on WARUGADAR to give it vision.

- The Warugadar interface was developed in MATLAB. We want to port it to Pyro to take advantage of open-source software.

Dates

- Start date: May 2008

- End date: March 2009

People involved

Project head

- Prof. Giuseppina Gini

- Prof. Paolo Belluco

Students

Laboratory work and risk analysis

Laboratory work for this project will be performed mainly at AIRLab/Lambrate. It will include a significant amount of mechanical work as well as electrical and electronic work. Potentially risky activities are the following:

- Use of mechanical tools. Standard safety measures described in Safety norms will be followed.

Part 2: Project Description

Design choices

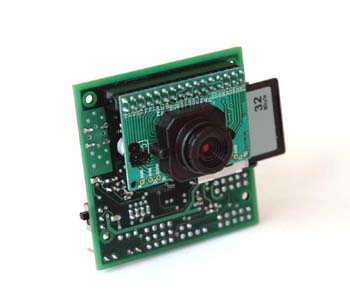

To implement the vision system, I use the CMUcam3 (CMU stands for Carnegie Mellon University, in Pittsburgh, Pennsylvania).

The CMUcam3 is more than a simple camera: it is a fully programmable, ARM7TDMI-based embedded computer vision sensor. Its main processor, a Philips LPC2106, is connected to an OmniVision CMOS camera sensor module with a maximum resolution of 352x288 pixels. Custom C code can be developed for the CMUcam3 using a port of the GNU toolchain, together with a set of open-source libraries and example programs. Executables are flashed onto the board through the serial port, so no external programming hardware is required.
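The following minimal color-blob tracking sketch shows the kind of processing such a sensor can run on board. The actual vision code for the board was written in C. This sketch uses Python for readability and is a generic technique, not the project's code. The frame representation (rows of `(R, G, B)` tuples), the names `track_color` and `Blob`, and the threshold values are assumptions for illustration; none of them belongs to the CMUcam3 libraries. The image is scanned one row at a time, which is how a memory-constrained processor would usually have to process it.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Sequence, Tuple

Pixel = Tuple[int, int, int]  # assumed (R, G, B), each 0-255


@dataclass
class Blob:
    cx: float    # centroid column
    cy: float    # centroid row
    x1: int      # bounding box, top-left corner
    y1: int
    x2: int      # bounding box, bottom-right corner
    y2: int
    pixels: int  # number of pixels inside the color range


def track_color(rows: Iterable[Sequence[Pixel]], lo: Pixel, hi: Pixel) -> Optional[Blob]:
    """Centroid and bounding box of pixels whose R, G, B all lie in [lo, hi]."""
    count = sum_x = sum_y = 0
    x1 = y1 = x2 = y2 = -1
    for y, row in enumerate(rows):  # only one row is needed at a time
        for x, (r, g, b) in enumerate(row):
            if lo[0] <= r <= hi[0] and lo[1] <= g <= hi[1] and lo[2] <= b <= hi[2]:
                if count == 0:
                    x1, y1, x2, y2 = x, y, x, y
                else:
                    x1, x2 = min(x1, x), max(x2, x)
                    y1, y2 = min(y1, y), max(y2, y)
                count += 1
                sum_x += x
                sum_y += y
    if count == 0:
        return None  # nothing in range: the target is not visible
    return Blob(sum_x / count, sum_y / count, x1, y1, x2, y2, count)


# Usage: a synthetic 352x288 frame, black except for a 10x10 red square.
W, H = 352, 288
RED, BLACK = (200, 20, 20), (0, 0, 0)
frame = [[RED if 100 <= x < 110 and 50 <= y < 60 else BLACK for x in range(W)]
         for y in range(H)]
blob = track_color(frame, lo=(150, 0, 0), hi=(255, 60, 60))
print(blob)  # Blob(cx=104.5, cy=54.5, x1=100, y1=50, x2=109, y2=59, pixels=100)
```

A mean-of-coordinates centroid is cheap enough for a small microcontroller because it needs only a few running sums per frame, not a full copy of the image.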

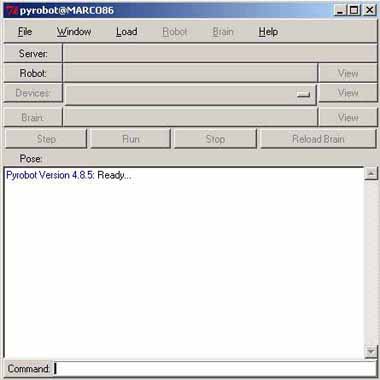

To implement the navigation system, I use the Pyro interface developed by my colleague Giovanni Alfieri instead of the MATLAB interface developed by Cirillo Simone.

What is Pyro? Pyro stands for Python Robotics. Its goal is to provide a programming environment for exploring advanced topics in artificial intelligence and robotics without having to worry about the low-level details of the underlying hardware. Pyro is written in Python, an interpreted language, so you can experiment interactively with your robot programs. Besides being an environment, Pyro is also a collection of Python object classes. Because Pyro abstracts away the underlying hardware details, it can be used with several different types of mobile robots and robot simulators. Pyro supports different styles of controllers, which are called the robot's brains. A distinctive feature of Pyro is that controllers can be written against robot abstractions, so the same controller can operate robots with vastly different morphologies.
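The small, self-contained sketch below illustrates the idea of one brain working across morphologies. It follows the style of a Pyro brain: a class whose `step()` method is called repeatedly and which drives the robot with translate and rotate values. It does not import Pyro, however. `RobotInterface`, `StubRobot`, `WheeledRobot`, `LeggedRobot` and `AvoidObstacles` are hypothetical stand-ins, so the example runs with plain Python. Range readings are assumed to be normalized (1.0 means nothing nearby), and positive rotation is assumed to mean turning left.

```python
from typing import Dict, List, Protocol, Tuple


class RobotInterface(Protocol):
    """Hypothetical abstraction: everything a brain may read or command."""
    def ranges(self) -> Dict[str, float]: ...
    def move(self, translate: float, rotate: float) -> None: ...


class StubRobot:
    """Fake range sensors with fixed, normalized readings."""
    def __init__(self, front: float, left: float, right: float) -> None:
        self._ranges = {"front": front, "left": left, "right": right}
        self.log: List[Tuple[str, float, float]] = []

    def ranges(self) -> Dict[str, float]:
        return dict(self._ranges)


class WheeledRobot(StubRobot):
    def move(self, translate: float, rotate: float) -> None:
        # A wheeled base could map the command straight to wheel speeds.
        self.log.append(("wheels", translate, rotate))


class LeggedRobot(StubRobot):
    def move(self, translate: float, rotate: float) -> None:
        # A quadruped has to turn the same command into a gait choice.
        gait = "turn" if abs(rotate) > abs(translate) else "walk"
        self.log.append((gait, translate, rotate))


class AvoidObstacles:
    """Brain in the Pyro style: step() is called once per control cycle."""
    def __init__(self, robot: RobotInterface, safe: float = 0.5) -> None:
        self.robot = robot
        self.safe = safe  # assumed threshold on the normalized front range

    def step(self) -> None:
        r = self.robot.ranges()
        if r["front"] < self.safe:
            # Blocked: stop and rotate toward the more open side (+ = left).
            self.robot.move(0.0, 0.5 if r["left"] > r["right"] else -0.5)
        else:
            self.robot.move(0.3, 0.0)  # path clear: go forward


# The same brain drives both bodies without modification.
for robot in (WheeledRobot(0.2, 0.9, 0.4), LeggedRobot(0.2, 0.9, 0.4)):
    AvoidObstacles(robot).step()
    print(robot.log)
# [('wheels', 0.0, 0.5)]
# [('turn', 0.0, 0.5)]
```

The brain only ever sees `ranges()` and `move()`. Everything that depends on the body (wheel speeds versus gait selection) stays inside the robot class, which is what lets one controller serve different morphologies.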

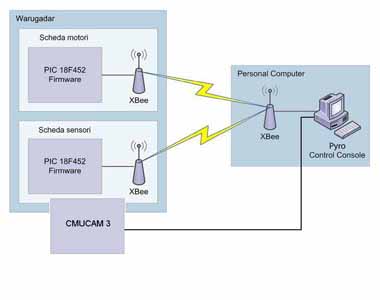

The image on the right shows the software architecture of the system.
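In an architecture like this, the camera and the Pyro controller exchange data over a serial link. The sketch below assumes the camera reports each result as a text line modeled on the CMUcam2-style tracking packet `T mx my x1 y1 x2 y2 pixels confidence`. The message format actually used by the project is not documented here, and `TrackPacket` and `parse_track_packet` are hypothetical names. On the PC side, each line only needs to be turned into a small record before the brain uses it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TrackPacket:
    mx: int          # centroid column
    my: int          # centroid row
    x1: int          # bounding box, top-left corner
    y1: int
    x2: int          # bounding box, bottom-right corner
    y2: int
    pixels: int      # amount of tracked pixels
    confidence: int  # tracking confidence reported by the camera


def parse_track_packet(line: str) -> Optional[TrackPacket]:
    """Parse 'T mx my x1 y1 x2 y2 pixels confidence'; None if the line is not one."""
    fields = line.split()  # split() also drops the trailing '\r'
    if len(fields) != 9 or fields[0] != "T":
        return None
    try:
        values = [int(f) for f in fields[1:]]
    except ValueError:
        return None  # malformed numbers: ignore the line instead of crashing
    return TrackPacket(*values)


print(parse_track_packet("T 104 54 100 50 109 59 100 80\r"))
# TrackPacket(mx=104, my=54, x1=100, y1=50, x2=109, y2=59, pixels=100, confidence=80)
print(parse_track_packet("ACK"))
# None
```

The parser returns `None` for anything it does not recognize. Serial noise or acknowledgement lines then reach the navigation code as "no target", not as an exception.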

Practical Phase

- Mounting the camera on Warugadar;

- Implementation of the vision system in C; this code has been flashed onto the CMUcam3 board;

- Implementation of the navigation system in Pyro.
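A navigation step could use the camera output through proportional steering toward the tracked target's centroid, sketched below. The robot stands still when the target is lost or the tracking confidence is low. This is not the project's actual controller. The frame width (352 pixels, the sensor's maximum resolution), the gain, the speed and the confidence threshold are assumed values, `steer_to_target` is a hypothetical name, and positive rotation is assumed to mean turning left.

```python
from typing import Optional, Tuple

FRAME_WIDTH = 352  # assumed: full-resolution frame width in pixels


def steer_to_target(mx: Optional[int], confidence: int,
                    gain: float = 1.0, speed: float = 0.3,
                    min_conf: int = 20) -> Tuple[float, float]:
    """Return (translate, rotate) toward the target, rotate clamped to [-1, 1].

    mx is the centroid column reported by the camera (0 = left edge),
    or None when nothing was tracked.
    """
    if mx is None or confidence < min_conf:
        return 0.0, 0.0  # safe default: stand still when the target is lost
    center = (FRAME_WIDTH - 1) / 2
    error = (mx - center) / center  # -1 at the left edge, +1 at the right edge
    # Turn toward the target: a target on the left (error < 0) gives rotate > 0.
    rotate = max(-1.0, min(1.0, -gain * error))
    # Walk more slowly while the target is far off-axis.
    translate = speed * (1.0 - min(1.0, abs(error)))
    return translate, rotate


for mx in (0, 176, 351, None):
    print(mx, steer_to_target(mx, confidence=90))
```

Stopping on low confidence is the conservative choice: a legged robot that keeps walking on a stale or noisy target is harder to recover than one that simply waits for the next good frame.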

Part 3: Conclusion

Warugadar can move in a structured environment independently and safely.

- [Video on YouTube](http://www.youtube.com/watch?v=iuWaEtW__xc)