LARS and LASSO in non Euclidean Spaces


Revision as of 22:28, 16 October 2009

| Title: | LARS and LASSO in non Euclidean Spaces |

| Image: | lasso.jpg |

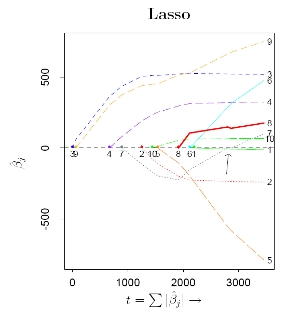

| Description: | [[prjDescription::LASSO \[1\] and, more recently, LARS \[2\] are two algorithms proposed for linear regression tasks. In particular, LASSO solves a least-squares (quadratic) optimization problem with a constraint that limits the sum of the absolute values of the regression coefficients, while LARS can be considered a generalization of LASSO that provides a more computationally efficient way to obtain the solution of the regression problem simultaneously for all values of the constraint introduced by LASSO.

One of the common hypotheses in regression analysis is that the noise term in the linear model relating the regressors to the dependent variable has a Gaussian distribution. Under this hypothesis, with independent and identically distributed noise, maximum likelihood estimation reduces to least squares, that is, to minimizing a squared Euclidean distance. Relaxing this hypothesis leads to a more general framework in which the geometry of the regression task is no longer Euclidean. In this context, different estimation criteria, such as maximum likelihood estimation and other canonical divergence functions, no longer coincide. The goal of the project is to compare the solutions associated with different criteria, for example in terms of robustness, and to propose generalizations of LASSO and LARS to non-Euclidean settings. The project will focus on understanding the problem and on implementing different algorithms, so coding (in C/C++, Matlab or R) will be required. Since the project also has a theoretical flavor, mathematically inclined students are encouraged to apply. The project may require some extra effort to build and consolidate a background in mathematics, especially in probability and statistics. Picture taken from \[2\].

Bibliography

\[1\] R. Tibshirani, "Regression shrinkage and selection via the lasso", J. Royal Statist. Soc. B, Vol. 58, No. 1, pp. 267-288, 1996.

\[2\] B. Efron, T. Hastie, I. Johnstone and R. Tibshirani, "Least Angle Regression", 2003.]] | |

| Tutor: | Matteo Matteucci (matteo.matteucci@polimi.it), Luigi Malago (malago@elet.polimi.it) | |

| Start: | 2009/10/01 | |

| Students: | 1 - 2 | |

| CFU: | 20 - 20 | |

| Research Area: | Machine Learning | |

| Research Topic: | Information Geometry | |

| Level: | Ms | |

| Type: | Course, Thesis | |

| Status: | Active |
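To make the LASSO problem in the description concrete, here is a minimal pure-Python sketch. It solves the penalized (Lagrangian) form `0.5 * ||y - X beta||^2 + lam * sum_j |beta_j|` by cyclic coordinate descent with soft-thresholding. This is a swapped-in solver, not the quadratic-programming approach of \[1\] and not LARS; for each `lam`, the result also solves the constrained form with bound `t = sum_j |beta_j(lam)|`. The helper names `soft_threshold`, `lambda_max`, `lasso_cd` and `lasso_path` are hypothetical, and no intercept is fitted.

```python
# Hypothetical helper names; a coordinate-descent sketch, not LARS or the solver of [1].

def soft_threshold(z, gamma):
    """Shrink z toward zero by gamma (proximal operator of gamma * |.|)."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def lambda_max(X, y):
    """Smallest lambda for which the penalized solution is all zeros: max_j |x_j^T y|."""
    p = len(X[0])
    return max(abs(sum(row[j] * yi for row, yi in zip(X, y))) for j in range(p))


def lasso_cd(X, y, lam, beta=None, n_iter=500, tol=1e-12):
    """Minimize 0.5 * ||y - X beta||^2 + lam * sum_j |beta_j| by cyclic coordinate descent.

    X is a list of n rows with p entries each, y a list of n targets.
    No intercept is fitted (centre X and y beforehand if one is needed).
    """
    n, p = len(X), len(X[0])
    beta = list(beta) if beta is not None else [0.0] * p
    # Residual r = y - X beta, updated incrementally after each coordinate step.
    r = [y[i] - sum(X[i][j] * beta[j] for j in range(p)) for i in range(n)]
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) for j in range(p)]
    for _ in range(n_iter):
        max_change = 0.0
        for j in range(p):
            if col_sq[j] == 0.0:
                continue  # an all-zero column never enters the model
            # Correlation of column j with the partial residual r + x_j * beta_j.
            rho = sum(X[i][j] * r[i] for i in range(n)) + col_sq[j] * beta[j]
            new = soft_threshold(rho, lam) / col_sq[j]
            delta = new - beta[j]
            if delta != 0.0:
                for i in range(n):
                    r[i] -= X[i][j] * delta
                beta[j] = new
                max_change = max(max_change, abs(delta))
        if max_change < tol:
            break
    return beta


def lasso_path(X, y, lambdas):
    """Approximate the LASSO path on a grid, warm-starting from the previous fit.

    Lambdas are visited from largest to smallest; LARS instead computes the
    exact piecewise-linear path without a grid.
    """
    path, beta = [], None
    for lam in sorted(lambdas, reverse=True):
        beta = lasso_cd(X, y, lam, beta)
        path.append((lam, list(beta)))
    return path


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = [1.0, 2.0, 3.0]
print(lambda_max(X, y))  # 5.0
for lam, beta in lasso_path(X, y, [0.0, 2.0, 5.0]):
    print(lam, [round(b, 4) for b in beta], "L1 norm:", round(sum(abs(b) for b in beta), 4))
```

On this toy data the coefficients are exactly zero at `lam = 5.0`, shrink toward the least-squares fit `[1, 2]` as `lam` decreases, and the L1 norm of the solution grows along the way, which is the trade-off that the LASSO constraint controls. A grid with warm starts is the simplest way to trace the path; LARS avoids the grid altogether.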
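The point in the description that different noise models lead to different estimation criteria can be illustrated in the simplest case, an intercept-only (location) model `y_i = mu + noise`. Under unit-variance Gaussian noise, maximizing the likelihood is the same as minimizing the sum of squared residuals, whose minimizer is the sample mean; under unit-scale Laplace noise, it is the same as minimizing the sum of absolute residuals, whose minimizer is the sample median. The sketch below minimizes each negative log-likelihood numerically by ternary search (both are convex in `mu`) and compares the result with `statistics.mean` and `statistics.median`. The toy data, the helper names and the choice of the Laplace distribution are assumptions for illustration only, not the specific models the project studies.

```python
import statistics

# Hypothetical helpers: negative log-likelihoods with additive constants dropped.
def gaussian_nll(mu, data):
    """Negative log-likelihood of N(mu, 1): half the sum of squared residuals."""
    return 0.5 * sum((y - mu) ** 2 for y in data)


def laplace_nll(mu, data):
    """Negative log-likelihood of Laplace(mu, 1): the sum of absolute residuals."""
    return sum(abs(y - mu) for y in data)


def argmin_convex(f, lo, hi, iters=200):
    """Ternary search for the minimizer of a convex function on [lo, hi]."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if f(m1) < f(m2):
            hi = m2  # a minimizer lies in [lo, m2]
        else:
            lo = m1  # a minimizer lies in [m1, hi]
    return (lo + hi) / 2


data = [1.0, 2.0, 3.0, 4.0, 100.0]  # toy sample with one gross outlier
lo, hi = min(data), max(data)
mu_gauss = argmin_convex(lambda m: gaussian_nll(m, data), lo, hi)
mu_laplace = argmin_convex(lambda m: laplace_nll(m, data), lo, hi)

print(round(mu_gauss, 3), statistics.mean(data))      # 22.0 22.0
print(round(mu_laplace, 3), statistics.median(data))  # 3.0 3.0
```

The single outlier drags the Gaussian estimate to 22.0, while the Laplace estimate stays at 3.0. This is the kind of robustness difference between criteria that the project proposes to compare.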